Upgrades: How do we handle future upgrades when needed?ĭAG development: How easy is it to develop or iterate on DAGs? Can we test any component locally before pushing it upstream?ĭAG deployment: How do we propagate updates to different environments? What are the artifacts we need to generate to identify a single release candidate?įeature flags: Can we hide certain features from certain deployments? Can we override environment variables easily? Visibility: How easy is it for us to view failed jobs? The current status of a job? How often a job is retired? Compute analytics about our job runtimes?

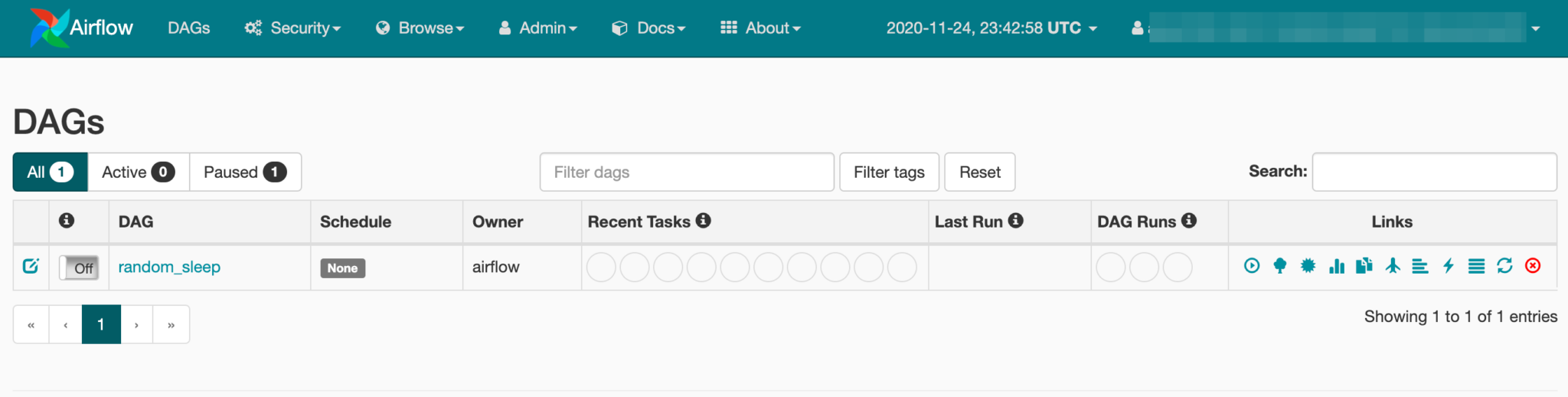

In order to ensure that we picked the best possible approach for our use case, our team identified a few key components which we wanted to focus on, please note that for your team the components may be different: Kubernetes using a common helm chart: essentially a repeat of our Airflow v1ĪrgoCD Workflows: we already utilize Argo to manage various CI/CD pipelines, so this seemed like an easy pivot to make Our team looked at three different migration paths:Īmazon Managed Workflows for Apache Airflow (MWAA): a fully managed service offering by AWS You may even look for a different way to solve the same problem. When coming across big migration tasks such as these, it’s always a good idea to take a look at other alternatives which may be more suitable or easier to implement. Our database needed to be migrated to a new version which is not supported by our current implementation of Airflow. Several operators and utility functions that our team defined used features that were refactored or no longer supported. However, back in 2020 as the new Airflow v2 was released our team began facing problems trying to migrate to the newest version: We self-host the tool through Kubernetes. Here at Sumo, our team has been using this technology for several years to manage various jobs relating to our organization’s Global Intelligence, Global Confidence, and other data science initiatives. Apache Airflow is an open-source orchestration platform that enables the development, scheduling and monitoring of tasks using directed acyclic graphs (DAGs).
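
To make the feature-flag question a little more concrete, here is a minimal sketch in plain Python of hiding a job from some deployments by overriding an environment variable. Everything named here is an assumption made for illustration: the `FEATURE_` prefix, the `flag_enabled` and `enabled_jobs` helpers, and the job names are hypothetical and are not part of Airflow or of any setup described in this post.

```python
import os

# Values treated as "on" when read from the environment (an assumed convention).
TRUTHY = {"1", "true", "yes", "on"}


def flag_enabled(name, default=False, env=None):
    """Return True if the feature flag `name` is switched on.

    Flags are read from variables named FEATURE_<NAME>. The prefix is a
    hypothetical convention for this sketch, not something Airflow defines.
    """
    env = os.environ if env is None else env
    raw = env.get(f"FEATURE_{name.upper()}")
    if raw is None:
        return default
    return raw.strip().lower() in TRUTHY


def enabled_jobs(jobs, env=None):
    """Keep only the jobs that are unflagged or whose flag is on."""
    return [job for job, flag in jobs if flag is None or flag_enabled(flag, env=env)]


# Hypothetical jobs: (job name, flag guarding it or None if always on).
JOBS = [("daily_rollup", None), ("experimental_model", "new_model")]

# Two pretend deployments that differ only in their environment variables.
staging = {"FEATURE_NEW_MODEL": "true"}
production = {}

print(enabled_jobs(JOBS, env=staging))     # ['daily_rollup', 'experimental_model']
print(enabled_jobs(JOBS, env=production))  # ['daily_rollup']
```

Passing the environment in as a dictionary keeps the check easy to test locally, which also touches on the DAG development question: the same function can be exercised without a running scheduler.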
